Publications

Journal Papers

1. Karim, M.M., Qin, R., Wang, Y. (2024). Fusion-GRU: A Deep Learning Model for Future Bounding Box Prediction of Traffic Agents in Risky Driving Videos. Transportation Research Record. DOI

2. Karim, M.M., Qin, R., Yin, Z. (2023). An Attention-guided Multistream Feature Fusion Network for Localization of Risky Objects in Driving Videos. IEEE Transactions on Intelligent Vehicles, doi: 10.1109/TIV.2023.3275543. Preprint | Code

3. Karim, M.M., Li, Y., Qin, R., Yin, Z. (2022). A dynamic spatial-temporal attention network for early anticipation of traffic accidents. IEEE Transactions on Intelligent Transportation Systems, vol. 23, no. 7, pp. 9590-9600. Preprint | Code

4. Karim, M.M., Li, Y., Qin, R. (2022). Towards explainable artificial intelligence (XAI) for early anticipation of traffic accidents. Transportation Research Record, 2676(6), 743-755. Preprint | Code

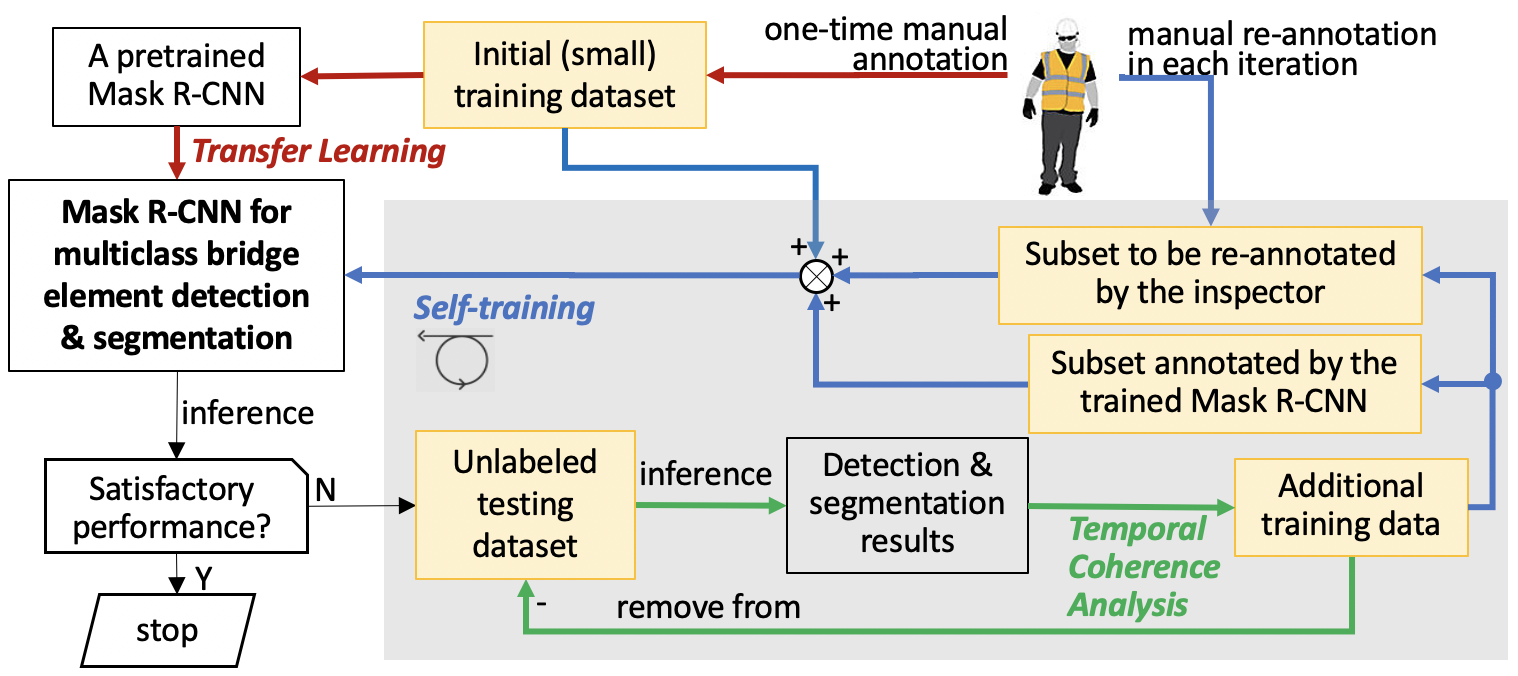

5. Karim, M.M., Qin, R., Yin, Z., & Chen, G. (2021). A semi-supervised self-training method to develop assistive intelligence for segmenting multiclass bridge elements from inspection videos. Structural Health Monitoring, 21(3), 835-852. Preprint | Code

6. Li, Y., Karim, M.M., Qin, R., Sun, Z., Wang, Z., Yin, Z. (2021). Crash report data analysis for creating scenario-wise, spatio-temporal attention guidance to support computer vision-based perception of fatal crash risks. Accident Analysis and Prevention, 151, 105962. Preprint

Peer-Reviewed Conference Papers

1. Karim, M.M., Li, Y., Qin, R., Yin, Z. (2021). A system of vision sensor based deep neural networks for complex driving scene analysis in support of crash risk assessment and prevention. The 100th Transportation Research Board (TRB) Annual Meeting, Virtual Meeting, January 5-29, 2021. Preprint | Code

2. Karim, M.M., Dagli, CH. (2020). SoS meta-architecture selection for infrastructure inspection system using aerial drones. In Proceedings of the 15th IEEE International Symposium on System of Systems Engineering (SoSE 2020). Budapest, Hungary. June 2-4, 2020. DOI

3. Karim, M.M., Dagli, CH., & Qin, R. (2019). Modeling and simulation of a robotic bridge inspection system. In Proceedings of the 2019 Complex Adaptive Systems Conference (CAS’19). Malvern, PA. November 13-15, 2019. DOI

4. Karim, M.M., Doell, D., Lingard, R., Yin, Z., Leu, MC., & Qin, R. (2019). A region-based deep learning algorithm for detecting and tracking objects in manufacturing plants. In Proceedings of the 25th International Conference on Production Research (ICPR’19). Chicago, IL. August 9-14, 2019. DOI | Code

Technical Reports

1. Qin, R., Chen, G., Long, S.K., Yin, Z., Louis, S., Karim, M.M., Zhao, T. (2020). A training framework of robotic operation and image analysis for decision-making in bridge inspection and preservation (Technical Report INSPIRE-006). USDOT INSPIRE University Transportation Center. Website

2. Qin, R., Yin, Z., Karim, M.M., Li, Y., Wang, Z. (2020). Crash prediction and avoidance by identifying and evaluating risk factors from onboard cameras (Technical Report 25-1121-0005-135-2). USDOT MATC University Transportation Center. Download